- Stringology

- Combinatorial Puzzles

- 1: Stringologic proof of Fermat's little theorem

- 2: Simple case of codicity testing

- 3: Magic squares and Thue-Morse word

- 4: Oldenburger-Kolakoski sequence

- 5: Square-free game

- 6: Fibonacci words and Fibonacci numeration system

- 7: Wythoff's game and Fibonacci word

- 8: Distinct periodic words

- 9: A relative of Thue-Morse word

- 10: Thue-Morse words and sums of powers

- 11: Conjugates and rotations of words

- 12: Conjugate palindromes

- 13: Many words with many palindromes

- 14: Short superword of permutations

- 15: Short supersequence of permutations

- 16: Skolem words

- 17: Langford words

- 18: From Lyndon words to de Bruijn words

- Pattern Matching

- 19: Border table

- 20: Shortest covers

- 21: Short borders

- 22: Prefix table

- 23: Border table to maximal suffix

- 24: Periodicity test

- 25: Strict borders

- 26: Delay of sequential string matching

- 27: Sparse matching automaton

- 28: Comparison-effective string matching

- 29: Strict border table of Fibonacci word

- 30: Words with singleton variables

- 31: Order-preserving patterns

- 32: Parameterised matching

- 33: Good-suffix table

- 34: Worst case of Boyer-Moore algorithm

- 35: Turbo-BM algorithm

- 36: String-matching with don't cares

- 37: Cyclic equivalence

- 38: Simple maximal suffix computation

- 39: Self-maximal words

- 40: Maximal suffix and its period

- 41: Critical position of a word

- 42: Periods of Lyndon word prefixes

- 43: Searching Zimin words

- 44: Searching irregular 2D-patterns

- Efficient Data Structures

- 45: List algorithm for shortest cover

- 46: Computing longest common prefixes

- 47: Suffix array to suffix tree

- 48: Linear suffix trie

- 49: Ternary search trie

- 50: Longest common factor of two words

- 51: Subsequence automaton

- 52: Codicity test

- 53: LPF table

- 54: Sorting suffixes of Thue-Morse words

- 55: Bare suffix tree

- 56: Comparing suffixes of a Fibonacci word

- 57: Avoidability of binary words

- 58: Avoiding a set of words

- 59: Minimal unique factors

- 60: Minimal absent words

- 61: Greedy superstring

- 62: Shortest common superstring of short words

- 63: Counting factors by length

- 64: Counting factors covering a position

- 65: Longest common-parity factors

- 66: Word square-freeness with DBF

- 67: Generic words of factor equations

- 68: Searching an infinite word

- 69: Perfect words

- 70: Dense binary words

- 71: Factor oracle

- Regularities in Words

- 72: Three square prefixes

- 73: Tight bounds on occurrences of powers

- 74: Computing runs on general alphabets

- 75: Testing overlaps in a binary word

- 76: Overlap-free game

- 77: Anchored squares

- 78: Almost square-free words

- 79: Binary words with few squares

- 80: Building long square-free words

- 81: Testing morphism square-freeness

- 82: Number of square factors in labelled trees

- 83: Counting squares in combs in linear time

- 84: Cubic runs

- 85: Short square and local period

- 86: The number of runs

- 87: Computing runs on sorted alphabet

- 88: Periodicity and factor complexity

- 89: Periodicity of morphic words

- 90: Simple anti-powers

- 91: Palindromic concatenation of palindromes

- 92: Palindrome trees

- 93: Unavoidable patterns

- Text Compression

- 94: BW transform of Thue-Morse words

- 95: BW transform of balanced words

- 96: In-place BW transform

- 97: Lempel-Ziv factorisation

- 98: Lempel-Ziv-Welch decoding

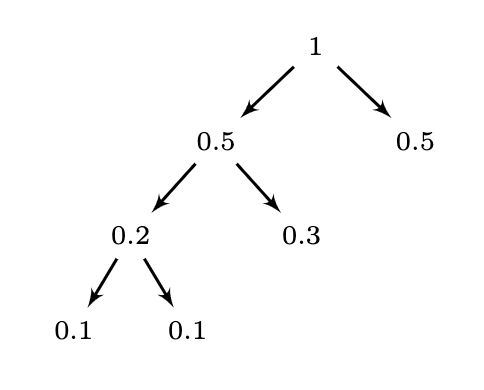

- 99: Cost of Huffman code

- 100: Length-limited Huffman coding

- 101: On-line Huffman coding

- 102: Run-length encoding

- 103: A compact factor automaton

- 104: Compressed matching in Fibonacci word

- 105: Prediction by partial matching

- 106: Compressing suffix arrays

- 107: Compression ratio of greedy superstrings

- Miscellaneous

- 108: Binary Pascal words

- 109: Self-reproducing words

- 110: Weights of factors

- 111: Letter-occurrence differences

- 112: Factoring with border-free prefixes

- 113: Primitivity test for unary extensions

- 114: Partially commutative alphabets

- 115: Greatest fixed-density necklace

- 116: Period-equivalent binary words

- 117: On-line generation of de Bruijn words

- 118: Recursive generation of de Bruijn words

- 119: Word equations with given lengths of variables

- 120: Diverse factors over a 3-letter alphabet

- 121: Longest increasing subsequence

- 122: Unavoidable sets via Lyndon words

- 123: Synchronising words

- 124: Safe-opening words

- 125: Superwords of shortened permutations

- Extra Problems

- 126: Computing short distinguishing subsequence

- 127: String attractors

- 128: Words with distinct cyclic k-factors

- 129: Shrinking a text by pairing adjacent symbols

- 130: Local periodicity lemma with one don't care symbol

- 131: Idempotent equivalence

- 132: 1-error correcting linear Hamming codes

- 133: Huffman codes vs entropy

- 134: Compressed pattern matching in Thue-Morse words

- 135: The words representing combinatorial generations

- 136: 2-anticovers

- 137: Short supersequence of shapes of permutations

- 138: Subsequence covers

- 139: Yet another application of suffix trees

- 140: Two longest subsequence problems

- 141: Two problems on run-length encoded words

- 142: Maximal number of (distinct) subsequences

- 143: Avoiding grasshopper repetitions

- 144: Counting unbordered words and relatives

- 145: Cartesian tree pattern-matching

- 146: List-constrained square-free strings

- 147: Superstrings of shapes of permutations

- 148: Linearly generated words and primitive polynomials

- 149: An application of linearly generated words

- 150: Text index for patterns with don't care symbols